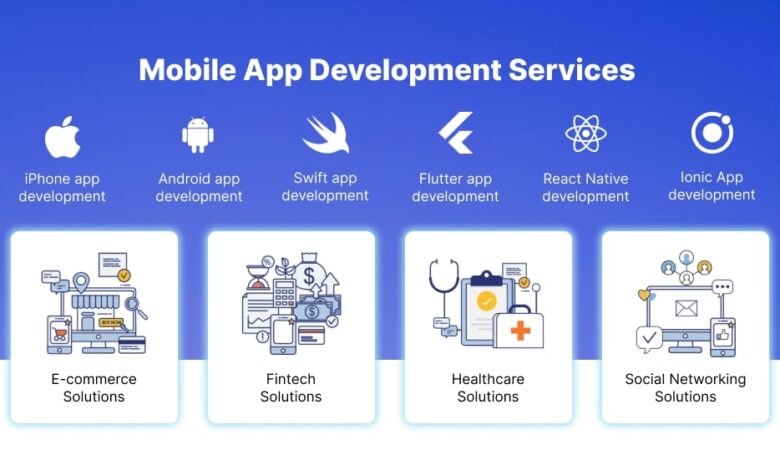

Mobile App Development Services for Managing High-Concurrency User Environments

High concurrency isn’t about handling many users; it’s about handling many users simultaneously without anyone noticing.

When millions of users tap “refresh” at the same moment, your app either scales gracefully or becomes a cautionary tale. Think of the last time a major app crashed during a product launch, sporting event, or breaking news.

Those failures represent more than technical problems; they’re trust-breaking moments that users remember. Enterprise mobile app development services for high-concurrency environments have evolved from luxury to necessity as user expectations collide with the reality of global, instantaneous demand.

Understanding High-Concurrency Challenges in Mobile Applications

Concurrent user load patterns and traffic spikes

Traffic patterns in mobile apps resemble earthquakes more than gentle waves. A viral post sends thousands of users flooding in within seconds. Push notifications trigger simultaneous opens across millions of devices. Time-zone-based peaks create rolling tsunamis of traffic that circle the globe.

These spikes often arrive with little or no warning. Your app might cruise along serving hundreds of users per second, then suddenly face hundreds of thousands. Black Friday, breaking news, viral content, or simply being featured in an app store can multiply traffic by orders of magnitude within minutes.

Resource contention and bottleneck identification

When thousands of users access the same data simultaneously, systems break in unexpected ways. Database locks create cascading delays. Memory fills faster than garbage collection can free it. Network connections exhaust available ports. CPU cores max out while other resources sit idle.

Bottlenecks hide in surprising places. A logging system that handles normal traffic might collapse under load. Third-party APIs that promise unlimited calls actually throttle after certain thresholds. Even successful operations can fail when success means updating millions of cache entries simultaneously.

Scalability limitations in traditional mobile architectures

Traditional three-tier architectures crumble under extreme concurrency. Monolithic backends often can’t scale horizontally without significant refactoring. Stateful sessions prevent load distribution across servers. Synchronous processing creates chains of waiting that multiply delays.

Mobile-specific constraints compound these problems. Varying network conditions mean some users experience millisecond latency while others face multi-second delays. Device diversity means your app runs on everything from flagship phones to budget devices from five years ago.

Performance degradation under high-load conditions

Performance doesn’t degrade linearly; it falls off cliffs. Response times might remain steady up to 10,000 concurrent users, then suddenly spike to unusable levels at 10,100. This non-linear behavior makes capacity planning challenging and failures catastrophic.
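One textbook queueing model makes the cliff concrete. In an M/M/1 queue (a single server, Poisson arrivals at rate λ, exponentially distributed service at rate μ), the mean time a request spends in the system is 1/(μ - λ), which grows without bound as utilization λ/μ approaches 100%. Real backends violate these assumptions, so treat the sketch below as an illustration of the shape of the curve, not a capacity model; the 100 requests/second service rate is a made-up value.

```python
def mm1_mean_response_time(arrival_rate: float, service_rate: float) -> float:
    """Mean time in an M/M/1 system (waiting + service), in seconds.

    Assumes Poisson arrivals and exponential service times; the formula is
    only valid while arrival_rate < service_rate (utilization below 100%).
    """
    if arrival_rate >= service_rate:
        raise ValueError("unstable: arrivals meet or exceed service capacity")
    return 1.0 / (service_rate - arrival_rate)


if __name__ == "__main__":
    service_rate = 100.0  # hypothetical server completing 100 requests/second
    for arrival_rate in (50.0, 80.0, 90.0, 95.0, 99.0):
        utilization = arrival_rate / service_rate
        latency_ms = mm1_mean_response_time(arrival_rate, service_rate) * 1000
        print(f"utilization {utilization:.0%}: mean latency {latency_ms:.0f} ms")
```

In this hypothetical, moving from 50% to 99% utilization raises mean latency from 20 ms to 1,000 ms, a fifty-fold jump for less than double the traffic.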

User experience suffers before systems actually fail. Slow responses frustrate users into repeatedly tapping buttons, creating additional load. Timeouts trigger retries that compound problems. What starts as slight slowness cascades into complete unavailability.
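A common client-side countermeasure is to retry with capped exponential backoff plus random jitter, so thousands of devices that failed at the same moment do not all retry at the same moment. A minimal sketch of the "full jitter" variant follows; `base`, `cap`, and `max_attempts` are illustrative defaults, and `call` stands in for any network request.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def backoff_delay(attempt: int, base: float = 0.5, cap: float = 30.0,
                  rng: Optional[random.Random] = None) -> float:
    """Full jitter: a uniform delay in [0, min(cap, base * 2**attempt)] seconds."""
    upper = min(cap, base * (2 ** attempt))
    if rng is None:
        return random.uniform(0.0, upper)
    return rng.uniform(0.0, upper)


def retry_with_backoff(call: Callable[[], T], max_attempts: int = 5,
                       sleep: Callable[[float], None] = time.sleep) -> T:
    """Run `call`, retrying failures with jittered exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except Exception:
            # In practice, retry only transient errors (timeouts, 503s), not 4xx.
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(backoff_delay(attempt))
    raise AssertionError("unreachable")
```

The randomness matters as much as the growth: without jitter, every client that timed out together would retry together and recreate the spike.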

High-Concurrency Architecture Fundamentals

Scalable Backend Infrastructure Design

Microservices architecture distributes load across independent services that scale individually. User authentication might need different scaling than payment processing. Product catalogs require different optimization than recommendation engines. This granular scaling ensures resources go where needed.

Event-driven architecture decouples components through asynchronous messaging. Instead of waiting for responses, services fire events and continue processing. This approach prevents cascading delays and enables parallel processing of independent operations.
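A minimal in-process sketch of the fire-and-continue idea, using Python's asyncio: `publish` schedules each subscriber as its own task and returns immediately instead of awaiting the handlers. The `EventBus` class and the `order_placed` event are hypothetical; in production the bus would usually be an external broker so that services can run and scale separately.

```python
import asyncio
from collections import defaultdict
from typing import Awaitable, Callable, DefaultDict, List, Set

Handler = Callable[[dict], Awaitable[None]]


class EventBus:
    """Hypothetical pub/sub bus: publish() does not wait for handlers."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)
        self._pending: Set[asyncio.Task] = set()

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        # Each handler runs as an independent task; the publisher moves on at once.
        for handler in self._subscribers[event_type]:
            task = asyncio.create_task(handler(payload))
            self._pending.add(task)  # hold a reference until the task finishes
            task.add_done_callback(self._pending.discard)

    async def drain(self) -> None:
        """Wait for in-flight handlers (useful in tests and at shutdown)."""
        if self._pending:
            await asyncio.gather(*self._pending)


async def demo() -> list:
    bus = EventBus()
    received = []

    async def send_receipt(event: dict) -> None:
        await asyncio.sleep(0.01)  # simulate slow I/O, e.g. an email provider
        received.append(("receipt", event["order_id"]))

    async def update_analytics(event: dict) -> None:
        received.append(("analytics", event["order_id"]))

    bus.subscribe("order_placed", send_receipt)
    bus.subscribe("order_placed", update_analytics)
    bus.publish("order_placed", {"order_id": 42})  # returns without waiting
    await bus.drain()
    return received


if __name__ == "__main__":
    print(asyncio.run(demo()))
```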

Command Query Responsibility Segregation (CQRS) separates read and write operations into different models. Since reads vastly outnumber writes in most applications, optimizing and scaling each side separately improves performance.
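A deliberately small sketch of the split, assuming in-memory storage: the write model enforces business rules and emits events, while a separate read model keeps a precomputed view so queries never touch the write path. In a real system the projection would usually be updated asynchronously and stored in a read-optimized database; all class and method names here are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class LikeAdded:
    post_id: str
    user_id: str


@dataclass
class WriteModel:
    """Command side: validates commands and records what happened."""
    events: List[LikeAdded] = field(default_factory=list)
    _likes: Set[Tuple[str, str]] = field(default_factory=set)

    def add_like(self, post_id: str, user_id: str) -> LikeAdded:
        if (post_id, user_id) in self._likes:
            raise ValueError("user already liked this post")  # rule lives on the write side
        self._likes.add((post_id, user_id))
        event = LikeAdded(post_id, user_id)
        self.events.append(event)
        return event


@dataclass
class ReadModel:
    """Query side: a denormalized view shaped for the app's screens."""
    like_counts: Dict[str, int] = field(default_factory=dict)

    def apply(self, event: LikeAdded) -> None:
        self.like_counts[event.post_id] = self.like_counts.get(event.post_id, 0) + 1

    def likes_for(self, post_id: str) -> int:
        return self.like_counts.get(post_id, 0)  # constant-time read, no counting query


if __name__ == "__main__":
    writes, reads = WriteModel(), ReadModel()
    for user in ("u1", "u2", "u3"):
        reads.apply(writes.add_like("post-7", user))
    print(reads.likes_for("post-7"))  # 3
```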

Load Balancing and Traffic Distribution

- Application Load Balancers: Distribute traffic based on content, routing specific requests to specialized servers

- Geographic Distribution: Place servers near users to reduce latency and distribute load across regions

- Circuit Breakers: Prevent cascading failures by stopping calls to failing services and providing fallback responses

- Auto-scaling Policies: Automatically add or remove resources based on metrics like CPU usage, request rate, or queue depth

- Health Checks: Continuously monitor server health and route traffic away from struggling instances
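Of these techniques, the circuit breaker is the one most often written in application code rather than configured in infrastructure. A minimal sketch, assuming a consecutive-failure threshold and a fixed cool-down; production libraries add limits on half-open trial calls, sliding failure windows, and metrics. The thresholds and the injectable clock are illustrative choices.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when a call is short-circuited because the breaker is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 5, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self._opened_at is None:
            return "closed"
        if self._clock() - self._opened_at >= self.reset_timeout:
            return "half-open"  # cool-down elapsed: let a trial call through
        return "open"

    def call(self, fn: Callable[[], T], fallback: Optional[Callable[[], T]] = None) -> T:
        if self.state == "open":
            if fallback is not None:
                return fallback()  # fail fast with a degraded response
            raise CircuitOpenError("circuit open; skipping call")
        try:
            result = fn()
        except Exception:
            self._failures += 1
            if self.state == "half-open" or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()  # trip, or re-trip after a failed trial
            raise
        self._failures = 0
        self._opened_at = None  # any success closes the circuit
        return result
```

While the breaker is open, the struggling service receives no traffic at all, which gives it room to recover instead of being buried by retries.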

Mobile-Specific Concurrency Optimization Strategies

Client-Side Performance Optimization

Connection pooling reduces overhead by reusing existing connections rather than establishing new ones for each request. Persistent connections maintain open channels for frequent communication. These techniques prove especially valuable on mobile networks where connection establishment costs more than on wired networks.

Request batching combines multiple operations into single network calls. Instead of fetching user profile, preferences, and history separately, batch them together. This reduces network overhead and battery consumption while improving perceived performance.
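A minimal client-side batching sketch with asyncio, assuming a hypothetical backend endpoint that accepts a list of sub-requests and returns results in the same order; `send_batch` stands in for that single network call. Fetches issued within a short window are coalesced and flushed together, while each caller still awaits its own result.

```python
import asyncio
from typing import Any, Awaitable, Callable, List, Set, Tuple

SendBatch = Callable[[List[str]], Awaitable[List[Any]]]


class RequestBatcher:
    """Coalesces fetches issued within `window` seconds into one batched call."""

    def __init__(self, send_batch: SendBatch, window: float = 0.01) -> None:
        self._send_batch = send_batch
        self._window = window
        self._pending: List[Tuple[str, asyncio.Future]] = []
        self._window_open = False
        self._tasks: Set[asyncio.Task] = set()  # strong refs to flush tasks

    async def fetch(self, resource: str) -> Any:
        future = asyncio.get_running_loop().create_future()
        self._pending.append((resource, future))
        if not self._window_open:
            # The first request in a window schedules one flush for everyone.
            self._window_open = True
            task = asyncio.create_task(self._flush_after_window())
            self._tasks.add(task)
            task.add_done_callback(self._tasks.discard)
        return await future

    async def _flush_after_window(self) -> None:
        await asyncio.sleep(self._window)
        batch, self._pending = self._pending, []
        self._window_open = False  # later requests start a new window
        try:
            # Assumption: the backend returns one result per request, in order.
            results = await self._send_batch([resource for resource, _ in batch])
        except Exception as exc:
            for _, future in batch:
                if not future.done():
                    future.set_exception(exc)
            return
        for (_, future), result in zip(batch, results):
            if not future.done():  # skip callers that were cancelled meanwhile
                future.set_result(result)


async def demo() -> Tuple[List[Any], int]:
    network_calls: List[List[str]] = []

    async def fake_send_batch(resources: List[str]) -> List[Any]:
        network_calls.append(resources)  # stands in for one HTTP round trip
        return [f"data:{name}" for name in resources]

    batcher = RequestBatcher(fake_send_batch)
    results = await asyncio.gather(
        batcher.fetch("profile"), batcher.fetch("preferences"), batcher.fetch("history"))
    return list(results), len(network_calls)


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Three logical fetches produce one network round trip, which is where the battery and latency savings come from.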

Intelligent caching stores frequently accessed data locally, eliminating network calls entirely. Predictive caching anticipates user needs and preloads likely-needed data. Cache invalidation strategies ensure data freshness without unnecessary updates.

Network Efficiency and Protocol Optimization

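HTTP/2 multiplexing allows multiple concurrent requests over a single connection, reducing latency and overhead. Header compression (HPACK) reduces bandwidth usage for repeated requests. Server push, which sends anticipated resources before clients request them, is also part of the protocol, although client support is limited and Chrome has dropped support for it.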

WebSocket connections enable real-time bidirectional communication without polling overhead. They prove essential for chat applications, live updates, and collaborative features. Connection management becomes critical as each WebSocket consumes server resources.

Compression algorithms such as gzip and Brotli reduce payload sizes significantly; repetitive JSON responses often shrink by 80% or more. Images can use progressive loading to show low-quality versions immediately while high-quality versions load in the background. These optimizations matter especially on mobile networks with data caps.
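The actual ratio depends heavily on the payload, so it is worth measuring on your own responses. A quick sketch using Python's standard `gzip` and `json` modules; the feed below is synthetic and its field names are made up. Payloads with many repeated keys tend to compress well, while already-compressed or random data will not.

```python
import gzip
import json
from typing import Tuple


def compression_report(payload: object) -> Tuple[int, int, float]:
    """Return (raw_bytes, gzipped_bytes, percent_saved) for a JSON-serializable payload."""
    raw = json.dumps(payload, separators=(",", ":")).encode("utf-8")
    compressed = gzip.compress(raw)
    saved = 100.0 * (1 - len(compressed) / len(raw))
    return len(raw), len(compressed), saved


if __name__ == "__main__":
    # Synthetic list endpoint: many records sharing the same keys.
    feed = [
        {"id": i, "username": f"user{i}", "status": "active",
         "avatar_url": f"https://example.com/avatars/{i}.png"}
        for i in range(500)
    ]
    raw_size, gz_size, saved = compression_report(feed)
    print(f"{raw_size} bytes -> {gz_size} bytes ({saved:.0f}% smaller)")
```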

Database Design for High-Concurrency Mobile Apps

NoSQL and Distributed Database Solutions

MongoDB excels at handling the varied document structures common in mobile apps. Replica sets provide redundancy and read scaling. These features help absorb concurrent access patterns that can overwhelm traditional, rigidly structured relational databases.

Cassandra thrives under the write-heavy workloads common in mobile analytics and event logging. Its tunable consistency, commonly configured for eventual consistency, trades immediate consistency for massive scalability. This trade-off works well for data where eventual consistency suffices.
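Cassandra's consistency is chosen per query. A widely used rule of thumb: with replication factor N, if the replicas acknowledging a write (W) plus the replicas consulted on a read (R) exceed N, every read overlaps at least one replica holding the latest write. The helper below illustrates that arithmetic for the single-data-center levels ONE, TWO, THREE, QUORUM, and ALL, with QUORUM meaning a majority (N // 2 + 1); it deliberately ignores multi-datacenter levels such as LOCAL_QUORUM.

```python
def replicas_required(level: str, replication_factor: int) -> int:
    """Replicas that must respond for a consistency level (single data center)."""
    levels = {
        "ONE": 1,
        "TWO": 2,
        "THREE": 3,
        "QUORUM": replication_factor // 2 + 1,  # a strict majority
        "ALL": replication_factor,
    }
    required = levels[level]
    if required > replication_factor:
        raise ValueError(f"{level} needs more replicas than RF={replication_factor}")
    return required


def reads_see_latest_write(write_level: str, read_level: str, replication_factor: int) -> bool:
    """True when read and write replica sets must overlap (R + W > N)."""
    w = replicas_required(write_level, replication_factor)
    r = replicas_required(read_level, replication_factor)
    return r + w > replication_factor


if __name__ == "__main__":
    for w, r in [("ONE", "ONE"), ("QUORUM", "QUORUM"), ("ONE", "ALL")]:
        print(f"RF=3, write {w}, read {r}: overlap={reads_see_latest_write(w, r, 3)}")
```

With RF=3, QUORUM writes plus QUORUM reads (2 + 2 > 3) overlap, while ONE/ONE (1 + 1) does not; the latter is the faster, eventually consistent choice described above.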

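Redis provides in-memory caching with very high throughput: a single instance commonly handles hundreds of thousands of simple operations per second, and clustering scales further. Session storage, leaderboards, and real-time analytics benefit from its speed. Persistence options (RDB snapshots and AOF logs) ensure data survives restarts while maintaining performance.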

Real-Time Communication and WebSocket Management

WebSocket Scaling and Connection Management

WebSocket connections require careful management at scale. Each connection consumes memory and file descriptors. Servers have limits on concurrent connections. Load balancing WebSocket connections requires sticky sessions or distributed state management.

Connection state must persist across server failures and rebalances. Redis or similar stores maintain connection mappings. Heartbeat mechanisms detect dead connections before they exhaust server resources. Recovery protocols reconnect clients transparently after network disruptions.
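A sketch of server-side heartbeat tracking, assuming clients send a periodic ping and the server periodically sweeps for connections that have been silent longer than a timeout. The `ConnectionRegistry` class is hypothetical and single-process; across several servers the last-seen map would live in a shared store such as Redis.

```python
import time
from typing import Callable, Dict, List


class ConnectionRegistry:
    """Tracks when each connection last proved it was alive."""

    def __init__(self, timeout: float = 45.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.timeout = timeout  # e.g. three missed 15-second heartbeats
        self._clock = clock
        self._last_seen: Dict[str, float] = {}

    def register(self, conn_id: str) -> None:
        self._last_seen[conn_id] = self._clock()

    def heartbeat(self, conn_id: str) -> None:
        # Any ping (or ordinary message) counts as proof of life.
        if conn_id in self._last_seen:
            self._last_seen[conn_id] = self._clock()

    def reap_stale(self) -> List[str]:
        """Remove and return connections silent for longer than `timeout`."""
        now = self._clock()
        stale = [cid for cid, seen in self._last_seen.items() if now - seen > self.timeout]
        for cid in stale:
            del self._last_seen[cid]  # caller then closes the socket and frees resources
        return stale

    def __len__(self) -> int:
        return len(self._last_seen)
```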

Push Notification Optimization

Push notifications seem simple but become complex at scale. Sending millions of notifications simultaneously can overwhelm servers. Batching, queuing, and rate limiting prevent self-inflicted denial of service.
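A minimal sketch of batching plus pacing for a large notification fan-out: device tokens are split into fixed-size batches, and batches are released at a bounded rate so the sender stays within its own throughput budget. The batch size and rate are placeholders rather than documented provider limits, and `send_batch` is a hypothetical callable wrapping whichever FCM or APNs client you use. In production this loop would typically run in a queue worker, not in a request handler.

```python
import time
from typing import Callable, Iterator, List, Sequence


def chunked(tokens: Sequence[str], size: int) -> Iterator[List[str]]:
    """Yield consecutive batches of at most `size` tokens."""
    if size < 1:
        raise ValueError("size must be positive")
    for start in range(0, len(tokens), size):
        yield list(tokens[start:start + size])


def fan_out(tokens: Sequence[str], send_batch: Callable[[List[str]], None],
            batch_size: int = 100, batches_per_second: float = 10.0,
            sleep: Callable[[float], None] = time.sleep) -> int:
    """Send notifications in paced batches; returns the number of batches sent."""
    interval = 1.0 / batches_per_second
    sent = 0
    for batch in chunked(tokens, batch_size):
        if sent:
            sleep(interval)  # pace batches instead of firing everything at once
        send_batch(batch)
        sent += 1
    return sent
```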

Platform differences between Firebase Cloud Messaging (FCM) and the Apple Push Notification service (APNs) require abstraction layers. Token management prunes expired or invalid device tokens. Analytics track delivery rates and user engagement. These systems must scale independently from main application servers.

Caching Strategies for High-Concurrency Applications

Multi-Layer Caching Architecture

- Browser Cache: Leverage HTTP headers to cache static assets in browsers, eliminating requests entirely

- CDN Layer: Distribute content globally through edge servers, reducing origin server load

- Application Cache: Store computed results in memory to avoid repeated calculations

- Database Cache: Cache query results to reduce database load for frequently accessed data

- Mobile App Cache: Store data locally on devices to enable offline functionality and reduce server calls

Cache Invalidation and Consistency

Cache invalidation remains one of computer science’s hardest problems. Time-based expiration is simple, but it can serve stale data until entries expire and wastes refetches on rarely-changing content. Event-based invalidation provides accuracy but requires sophisticated tracking of which updates affect which cached entries.
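A minimal in-memory sketch that combines both strategies: every entry carries a time-to-live as a safety net, and writers call `invalidate` when they observe a change. The `TTLCache` class is hypothetical and single-process; a shared cache such as Redis offers the same two levers through key expiry and explicit deletes.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class TTLCache:
    """Read-through cache with per-entry expiry plus explicit invalidation."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl
        self._clock = clock
        self._entries: Dict[Hashable, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get(self, key: Hashable, load: Callable[[], Any]) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # fresh hit
        value = load()  # miss or expired: fetch from the source of truth
        self._entries[key] = (now + self.ttl, value)
        return value

    def invalidate(self, key: Hashable) -> None:
        """Event-based invalidation: call when an update to `key` is observed."""
        self._entries.pop(key, None)
```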

Cache warming preloads frequently accessed data before users request it. This prevents cache misses during traffic spikes. Gradual warming prevents overwhelming systems during startup. Priority-based warming ensures critical data loads first.
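A sketch of gradual, priority-ordered warming, assuming each key has a numeric priority (lower loads first) and that `load_many` is a hypothetical bulk loader for the backing store. Keys load in small batches with a pause between them, so the warm-up does not become a traffic spike of its own.

```python
import time
from typing import Any, Callable, Dict, Iterable, List, Tuple


def warm_cache(cache: Dict[str, Any],
               keys_with_priority: Iterable[Tuple[str, int]],
               load_many: Callable[[List[str]], Dict[str, Any]],
               batch_size: int = 50,
               pause: float = 0.1,
               sleep: Callable[[float], None] = time.sleep) -> List[str]:
    """Populate `cache` in priority order; returns keys in the order they were warmed."""
    # sorted() is stable, so keys with equal priority keep their input order.
    ordered = [key for key, _ in sorted(keys_with_priority, key=lambda kp: kp[1])]
    warmed: List[str] = []
    for start in range(0, len(ordered), batch_size):
        batch = ordered[start:start + batch_size]
        cache.update(load_many(batch))  # one bulk read per batch
        warmed.extend(batch)
        if start + batch_size < len(ordered):
            sleep(pause)  # throttle so startup doesn't hammer the database
    return warmed
```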

Asynchronous Processing and Message Queuing

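Message queues decouple producers from consumers, enabling independent scaling. RabbitMQ provides sophisticated routing and, with publisher confirms and consumer acknowledgments, at-least-once delivery; consumers should therefore be idempotent, because messages can occasionally be redelivered. Amazon SQS offers serverless simplicity with automatic scaling.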

Background job processing moves heavy operations away from request paths. Image processing, report generation, and notification sending happen asynchronously. Worker pools scale based on queue depth rather than request rate. Failed jobs retry automatically with exponential backoff.
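Scaling on queue depth usually reduces to a small calculation: how many workers are needed to drain the current backlog within a target time, clamped to sensible bounds. A sketch under assumed inputs; per-worker throughput is something you would measure, and the drain target and bounds are policy choices.

```python
import math


def desired_workers(queue_depth: int, jobs_per_worker_per_sec: float,
                    target_drain_seconds: float, min_workers: int = 1,
                    max_workers: int = 100) -> int:
    """Workers needed to clear `queue_depth` jobs within `target_drain_seconds`."""
    if jobs_per_worker_per_sec <= 0 or target_drain_seconds <= 0:
        raise ValueError("throughput and drain target must be positive")
    jobs_per_worker = jobs_per_worker_per_sec * target_drain_seconds
    needed = math.ceil(queue_depth / jobs_per_worker)
    return max(min_workers, min(max_workers, needed))
```

For example, 12,000 queued jobs at 20 jobs per second per worker with a 60-second target gives 10 workers. A real policy would also add cooldown periods so the worker count does not flap as the queue oscillates.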

API Gateway and Rate Limiting Services

API gateways provide single entry points that handle cross-cutting concerns. Authentication, rate limiting, and request routing happen before reaching application servers. This centralization simplifies implementation and ensures consistency.

Rate limiting protects services from overwhelming load. Token bucket algorithms allow short bursts while enforcing an average rate. Sliding windows smooth enforcement across window boundaries. User-based limits prevent single users from consuming all resources, while IP-based limits curb abuse from individual sources, including unauthenticated clients; large distributed attacks usually require additional defenses at the network edge.
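A minimal single-process token bucket, assuming an injectable clock for testing: each bucket holds up to `capacity` tokens (the burst allowance) and refills at `rate` tokens per second (the sustained average). Keying one bucket per user ID or client IP gives per-user or per-IP limits; behind several gateway instances this state would need to live in a shared store, and a long-running limiter would also need to evict idle buckets.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate          # tokens added per second (sustained limit)
        self.capacity = capacity  # maximum burst size
        self._clock = clock
        self._tokens = capacity
        self._updated = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self._clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self._tokens = min(self.capacity, self._tokens + (now - self._updated) * self.rate)
        self._updated = now
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False  # caller would typically respond with HTTP 429 Too Many Requests


class PerKeyLimiter:
    """One bucket per key, e.g. a user ID or a client IP address."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._buckets: Dict[str, TokenBucket] = {}
        self._rate, self._capacity, self._clock = rate, capacity, clock

    def allow(self, key: str) -> bool:
        bucket = self._buckets.get(key)
        if bucket is None:
            bucket = self._buckets[key] = TokenBucket(self._rate, self._capacity, self._clock)
        return bucket.allow()
```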

Monitoring and Performance Analytics

Real-Time Monitoring Solutions

Application Performance Monitoring (APM) tools provide visibility into system behavior under load. They track response times, error rates, and resource usage. Distributed tracing follows requests across multiple services. These insights identify bottlenecks before they become critical.
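Averages hide the slow tail that users actually feel under load, which is why response times are usually reported as percentiles such as p95 and p99. A small sketch of the nearest-rank percentile method over a window of latency samples; APM products generally use streaming approximations such as histograms instead of sorting raw samples, so treat this as an illustration of the metric, not of any tool's internals.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


if __name__ == "__main__":
    latencies_ms = [20.0] * 95 + [900.0] * 5  # synthetic: 5% of requests are very slow
    mean = sum(latencies_ms) / len(latencies_ms)
    print(f"mean={mean:.0f} ms p95={percentile(latencies_ms, 95):.0f} ms "
          f"p99={percentile(latencies_ms, 99):.0f} ms")
```

In this synthetic sample the mean is 64 ms and p95 is 20 ms, yet one request in twenty takes 900 ms; only p99 exposes it.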

Custom metrics track business-specific indicators. User engagement, transaction success rates, and feature adoption require domain knowledge to interpret. Dashboards visualize these metrics for different audiences from developers to executives.

Performance Testing and Load Analysis

Load testing simulates expected traffic patterns to verify system capacity. Stress testing pushes beyond expected limits to understand failure modes. Spike testing simulates sudden traffic increases. These tests reveal problems before production deployment.

Performance profiling identifies code-level bottlenecks. Memory leaks, inefficient algorithms, and resource contention become visible under load. Optimization focuses on measured bottlenecks rather than assumed problems.

Key Takeaway: Monitoring isn’t just about detecting problems; it’s about understanding system behavior under various conditions. The insights gained from comprehensive monitoring inform architectural decisions that prevent future problems. Invest in monitoring before you need it, because by the time you need it, it’s too late to implement.

Conclusion

Managing high-concurrency mobile environments requires more than just adding servers. It demands architectural decisions that anticipate scale, implementation patterns that distribute load, and operational practices that maintain performance under pressure. Mobile app development services that specialize in high-concurrency systems understand these requirements and build platforms that scale gracefully.

The difference between apps that handle millions of concurrent users and those that crash under load isn’t luck or unlimited budgets. It’s thoughtful architecture, careful implementation, and continuous optimization. Every decision from database selection to caching strategy impacts scalability.

Success in high-concurrency environments comes from preparation, not reaction. The time to implement scalable architecture is before you need it. When traffic spikes arrive, successful apps handle them invisibly while others make headlines for all the wrong reasons.

With more than 15 years of industry expertise across 5 continents and 10+ industries, Devsinc’s team of highly vetted IT professionals understands these demands, which makes it a top choice for businesses leading the digital front.